¶ Title: AI and Continuous Threat Exposure Management

¶ Speaker: Jay Bavisi

¶ Company: EC-Council

¶ Are We Ready for 6G? (10 Million Connected Devices per Square Meter)

- Speed of Exploitation – Attackers can scan the entire internet in 2–5 days and exploit zero-day vulnerabilities within that time frame.

- Peripheral Asset Targeting – 70% of last year’s high-severity CVEs impacted QA/UAT/DEV systems, leaving openings for lateral movement.

- Stress & Alert Fatigue – The constant evolution of attack vectors contributes to burnout and missed detections.

- Sophistication of Attack Paths –

- Multi-stage and supply chain attacks are increasingly common.

- Point-in-time scans often miss chained attacks.

- AI & Hyper-Automation by Attackers – Adversaries now use AI to create new attacks in minutes, while manual penetration tests may take months to validate.

¶ Continuous Threat Exposure Management (CTEM)

- Continuous vs. Periodic – 24/7 assessment, not just scheduled scans.

- Threat Aware – Ingests real-time threat intelligence.

- Attack-Path Focused – Chains vulnerabilities to simulate realistic attacks.

- Validation Driven – Uses BAS (Breach & Attack Simulation), CART, or safe live attack testing.

- Risk-Prioritized Remediation – Balances impact vs. feasibility.

Core Capabilities:

- Discovery and monitoring

- Active validation (BAS/CART)

- Prioritized exploitable risk assessment

¶ 7 Deadly Sins of Cybersecurity

- Sloth – Complacency in defense.

- Ignorance – Lack of awareness.

- Gluttony – Collecting data without security.

- Pride – Overconfidence in defenses.

- Greed – Choosing cost over security.

- Wrath – Reactive rather than proactive responses.

- Envy – Imitating others without proper planning.

¶ Humanity 2.0: The Next Frontier of Security and Survival

¶ Speaker: Len Noe

¶ Company: CW PenSec

¶ Human Augmentation

- Medical Enhancements – CGMs, CPAP machines, pacemakers, prosthetics.

- Non-Medical Enhancements – Devices for accessibility and productivity.

¶ AI Replacing Jobs

- 2.5% of jobs overall are susceptible to AI replacement.

- High-income earners face the greatest replacement risk.

- Software development projected to see a 17.9% reduction – compared to “getting a face tattoo” (bad long-term advice).

¶ Dangers of AI Replacement

- AIID (AI Incident Database) – Catalogs incidents directly caused by AI misuse or failure.

- Unvetted AI Models – Most issues arise post-deployment, with nearly a 50/50 split between accidental and intentional incidents.

- “AI models are like drug dealers—except instead of drugs, they’re handing out our data.”

¶ Maintaining Relevance in an AI World

- “There is no SSL for humans.”

- Example: $25M fraud in Hong Kong from a deepfaked CFO video call.

- Identity insurance may soon be as essential as health or auto insurance.

- Biometric bypasses – High-resolution photos and contact lenses can trick retinal scans; fingerprints have been stolen from public appearances.

¶ Augmentation as an Option

- Not mandatory, but helps extend human capability.

- Interfaces allow interaction with high-knowledge technical systems.

- Helps maintain relevancy in a tech-dominated world.

- Raises issues of data sovereignty – where and how personal data is stored.

¶ Implants

- VivoKey – Bridges biological and digital identity.

- Ghost Drive – Implantable 1TB hard drive, subject to HIPAA/GDPR protections.

¶ Opening LLMs to the Public

- Considered both the best and worst development.

- Comparable to a child who knows all the bad words but refrains only because someone said not to.

¶ The Future of Offensive Security with AI

¶ Speaker: Bikash Barai

¶ Company: FireCompass

¶ AI Taxonomy

- Broader than most assume:

- Generative Models → Neural Networks → Machine Learning → Artificial Intelligence.

- Adversarial Search & Attack Planning

- Forward chaining (data-driven) – Predictive modeling.

- Backward chaining (goal-driven) – Attack path planning.

- Classification – Bayes Theorem as the foundation.

- Retrieval-Augmented Generation (RAG) – Combines LLMs with external data.

¶ AI in Penetration Testing

- Data-Driven Discovery – Supervised learning for vulnerability prediction.

- Reasoning & Planning – Graph Neural Networks, reinforcement learning, Monte Carlo Tree Search.

- Generative & LLMs – Used for automation, RAG for grounded outputs.

- Hybrid & Agentic AI – Multi-agent orchestration for discovery, execution, and validation.

¶ Challenges with General AI Models

- Lexical Sparsity – Limited understanding of rare terms.

- Semantic Drift – Misalignment of context.

- Contextual Errors – Incorrect interpretations of data.

¶ Future of Offensive Security

- Vulnerability Assessments (VA) are becoming obsolete.

- Shift from 20% pentesting coverage to 100% asset coverage.

- Move from point-in-time testing to continuous validation.

- From scope-limited pentests to objective-based red teaming.

- From human-driven to AI-augmented human testing.

¶ The Disappearing Junior Analyst: AI’s Talent Time Bomb

¶ Speakers: Paul Farley, Ed Pascua, Brent Hamilton, Aylin Orial

- Brent – Actually likes alert fatigue because it helps identify which technicians are engaged. Some are comfortable reviewing alerts all day, while others focus on fixing root issues. The ability to reduce noise and isolate relevant alerts is crucial.

- Ed – Expressed concern about increased unemployment in early-career positions due to AI replacing junior roles.

- Paul – Asked how removing junior analysts will affect senior technicians who rely on them for growth, mentoring, and handling lower-level work.

- Aylin – Data shows productivity increases of 40–60% at the individual level, but only 1 in 10 companies capture these gains effectively. Juniors are “the backbone of your organization.”

Further Discussion:

- Brent – AI can free technicians to work on higher-value tasks. Many treat AI as a “digital coworker.”

- Paul – Warned that if only a handful of staff adopt AI, the company won’t realize its full productivity benefits.

- Aylin – With AI, juniors can shift into orchestration, compliance, policy, and triage, which frees seniors for risk management and detecting model poisoning.

- Paul – Drew a parallel to the early cybersecurity curriculum: graduates could explain advanced concepts but lacked IT fundamentals (e.g., reading Event Viewer logs). Without mentorship, history could repeat.

- Mentorship Theme – All agreed it’s essential:

- Paul – Leaders must ensure mentorship continues.

- Brent – Supports mentorship but stresses teaching critical thinking, not just technical concepts.

- Aylin – Success metrics should evolve: don’t just count tickets closed, but look at threats resolved and workflows created to improve productivity.

¶ WARNING – Dark Realities of Cybercrime (Audience Discretion Advised)

¶ Speakers: Jesse Tuttle, Reese Tuttle

¶ Company: AP2T Labs

¶ Real-World Stories

- A Lawyer Walks into a Bar – A man landed his dream job but became victim of a romance scam. His partner collected personal photos from Snapchat, then shared them with his colleagues to blackmail him.

- The Cost of Sugar – A business owner doing marketing online hired a third party for promotion. The vendor delivered the service but also injected malware, leading to extortion.

- Beating the Odds – A man received a “betting helper” app on Telegram. Despite following best practices, the app contained malware that launched a man-in-the-middle attack, stealing logins, financial data, and company information.

¶ Broader Threat Landscape

- Pig-Butchering scams – Now the leading form of human trafficking in the U.S.

- 2024 FBI IC3 Report

- Total Complaints: Millions

- Losses: $50.5 billion

- Significant year-over-year increase

- IC3 also reports 65–85% of cyber incidents go unreported

- Psychological Impact – Rarely discussed, but victims often suffer lasting trauma.

- Scam Economy – Money from scams is funneled into illicit markets, sometimes even to “buy” people to sacrifice in ritual scams to increase odds of future fraud success.

- Call Centers – Many overseas call centers do legitimate outsourcing for U.S. firms while also hosting scam operations. Vulnerable employees are sometimes coerced—family members held hostage to prevent quitting.

¶ Good, Bad, and Ugly: Case Studies in Governance of Enterprise Risk

¶ Speaker: Keyaan Williams

¶ Company: Class-LLC

¶ Risk Governance Standards

- ISO 3700 – Governance corporations: purpose, stakeholder accountability, and promises delivered.

- FISMA (2002) – Early attempt at compliance; slow adoption (took over 3 years for full integration).

- NIST SP 839 – Defines risk tiers:

- Tier 1 – Board of Directors

- Tier 2 – C-Suite

- Tier 3 – Operational Level

- NIST Cybersecurity Framework 2.0 – Strong in Tier 3, weak in governance application.

¶ Key Governance Concepts

- Boards have a fiduciary duty to make reasonable decisions with the information available.

- Compliance cannot be the driver—for Fortune 500s, fines are often absorbed as an operational cost.

- Boards must understand accountability chains: who is responsible for action and follow-through.

¶ Case Studies & Lessons

- Equifax – Public data showed that the real damage wasn’t the breach itself, but misallocated resources causing failure in contractual obligations.

- Rite Aid – AI model had to be deleted after discovery of bias because it was deployed without proper validation.

- Blue Bell Ice Cream – Board held accountable for deaths caused by listeria because they failed to consider food safety governance.

- OFAC Example – Payments to sanctioned vendors are illegal; boards can be liable if preventive governance isn’t in place.

- UK Shipping Company – Despite multiple ISO certifications, a weak admin password and uneducated board led to total company collapse.

¶ ISO 3700: 7 Core Principles of Risk Management

- Purpose-driven oversight

- Structured and comprehensive governance

- Stakeholder engagement

- Systemic, long-term thinking

- Internal controls (NIST 855)

- Accountability and transparency

- Continuous improvement (aligned with NIST 8221 / IR 8286)

Final Point: All stakeholders—not just IT—must be cyber-aware.

¶ Security Testing in an AI World

¶ John Dickson

¶ Bytewhisper Security

¶ Traditional Penetration Testing

- Define Perceived Threat

- Conduct Reconnaissance

- Scanning + Manual Testing

- Exploitation

- Reporting + Remediation Recommendations

- Common findings: SQL Injection, Cross-Site Scripting (XSS), Privilege Escalation, Misconfigurations

¶ Traditional Pen Testing (2000)

- Network-centric

- Trusted vs. Untrusted Zones

- Definition of scope varied widely

¶ Traditional Pen Testing (2004–2022)

- Transition to application focus

- Network pen testing became commoditized

- Definition of scope still varied widely

¶ Strengths of Traditional Pen Testing

- Fairly well understood

- Repeatable

- Effective

¶ Weaknesses of Traditional Pen Testing

- Still not a universally accepted definition

- Depth varies

- “One man’s pen test is another’s Nessus scan.”

- Mixed metaphors

- Often treated as a compliance checkbox

- Can be static

- Does not address the additional attack surface that AI introduces

¶ AI Penetration Testing

- Builds upon the traditional network and application baseline

- Organizations are scrambling to test new AI-driven attack surfaces

- Definition of scope varies widely

¶ GenAI Is Creating Code at a Breathtaking Pace

- 78% of respondents report using AI — up 14% from 2023

- AI-generated software introduces a new scope of vulnerabilities

- Creates new attack surfaces

- Manual code review is essential to avoid missing AI-related flaws

- There is a substantial learning curve for cybersecurity leadership

¶ What Makes AI Pen Testing Different

- Concerns with underlying training data

- Non-determinism and randomness of outputs

- Tests must be repeated regularly; evaluating consistency is difficult

- Hallucination — cannot be reduced to zero

- Unintended bias

- Input manipulation

- Explainability (of both the model and the security test)

- New attack surfaces:

- Model Exploitation

- Adversarial Prompts

- Evasion Attacks

- Model Inversion and Extraction

- Prompt Injection and Jailbreaks

- Business and Security Risks

- AI Data Poisoning

- Attackers inject malicious or corrupted samples

- Reduces model accuracy

- Enables targeted attacks

- Adversarial Inputs

- Model degradation

- Targeted misbehavior

- Stealth and persistence

¶ OWASP Top 10 for LLMs (To Be Confirmed)

- Prompt Injection

- Insecure Output Handling

- Training Data Poisoning

- Model Denial of Service

- Supply Chain Vulnerabilities

- Insecure Plugin Design

- Sensitive Data Exposure

- Unauthorized Code Execution

- Overreliance on AI-generated Content

- Model Theft and Extraction

¶ Differences Between Traditional Pen Testing and AI Application Pen Testing

| Aspect | Traditional Pen Testing | AI Application Pen Testing |

|---|---|---|

| Scope | Networks, Applications | Models, Prompts, Training Data |

| Determinism | Predictable | Non-deterministic |

| Methodology | OWASP, NIST | OWASP LLM, AI RMF |

| Risk Focus | Technical | Data and Model Integrity |

| Frequency | Periodic | Continuous / Adaptive |

¶ Application Security Fundamentals

- Input Validation

- Authentication

- Authorization

- Session Management

- Data Protection

- Error Handling

¶ Agentic AI

- AI-powered systems that act autonomously

- Proactive rather than reactive

- Can “reason” about next actions

¶ MCP Testing Approach

- Define Scope → Threat Model

- Validate Authentication / Authorization

- Check Input Validation

- Verify Least Privilege Enforcement

- Validate Package / Supply Chain Integrity

- Consider Resilience

¶ References

- NIST AI Risk Management Framework

- OWASP Top 10 for LLMs

- OWASP Application Security Verification Standard (ASVS)

- MITRE ATLAS

¶ Is Your Budget Hurting Your GRC and Cyber Programs?

¶ Speaker: Troy Vennon

¶ Prosentra Cybersecurity

¶ Are You Funding the Right Initiatives?

- Cybersecurity budgets are often based on company size or available funds — not actual attack surface

- The cost to eliminate all risks is typically far beyond realistic budgets

¶ Risk Motivators

- Financial – Monetary loss, fines, brand damage

- Healthcare – HIPAA compliance and patient safety

- Retail – PCI-DSS and data breach risks

- Energy / Utilities – Operational continuity and national security

- Manufacturing – Supply chain integrity and downtime prevention

¶ Cybersecurity as a Boardroom Language: Translating Risk into Business Impact

¶ Speaker: Rajan Behal

¶ Company: KPMG

¶ The Bridge to Business Resilience

- Cybersecurity is now a core fiduciary duty for boards

- Ultimate accountability for cyber risk rests with the board

- Board members can now face personal liability for cyber incidents

- A communication gap exists — technical severity often lacks financial context

¶ The Shifting Threat Landscape

- Cybercrime is a financial risk with material consequences

- Global average breach cost: $4.88M

- Financial services average: $6.08M

- Breaches of 50M+ records: $375M+

- Attack vectors: Malicious (51%), IT failures (25%), Human error (24%)

¶ How to Report to the Board

-

Governance Strategy

- What is our cyber risk appetite?

- How do we compare to our peers?

- How is ROI on our controls evaluated?

-

Preparedness & Vulnerability

- How do we evaluate our cybersecurity culture?

- How is maturity ranked against frameworks like FFIEC?

- When was our last penetration test and what were the findings?

-

Incident Response

- Are we prepared to contain and recover from major incidents?

-

High / Medium / Low is Not Enough

- “High risk” means little to a CFO without quantifiable financial impact

- Executives speak in terms of ROI and revenue at risk

- The disconnect can lead to misallocated resources

- Case studies help bridge technical and business perspectives

“Boards and executives don’t need to understand technical concepts — they need to understand business impact.”

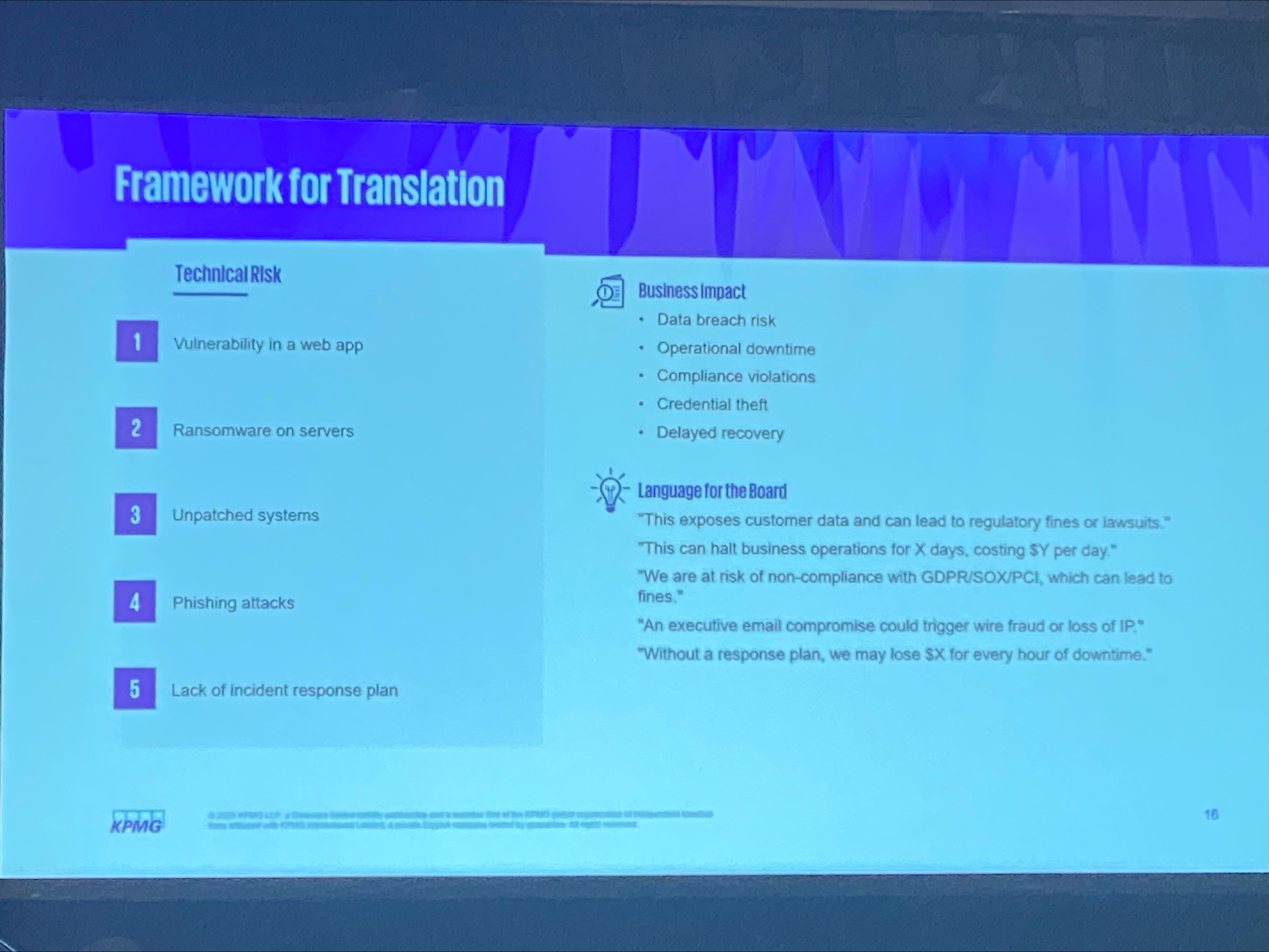

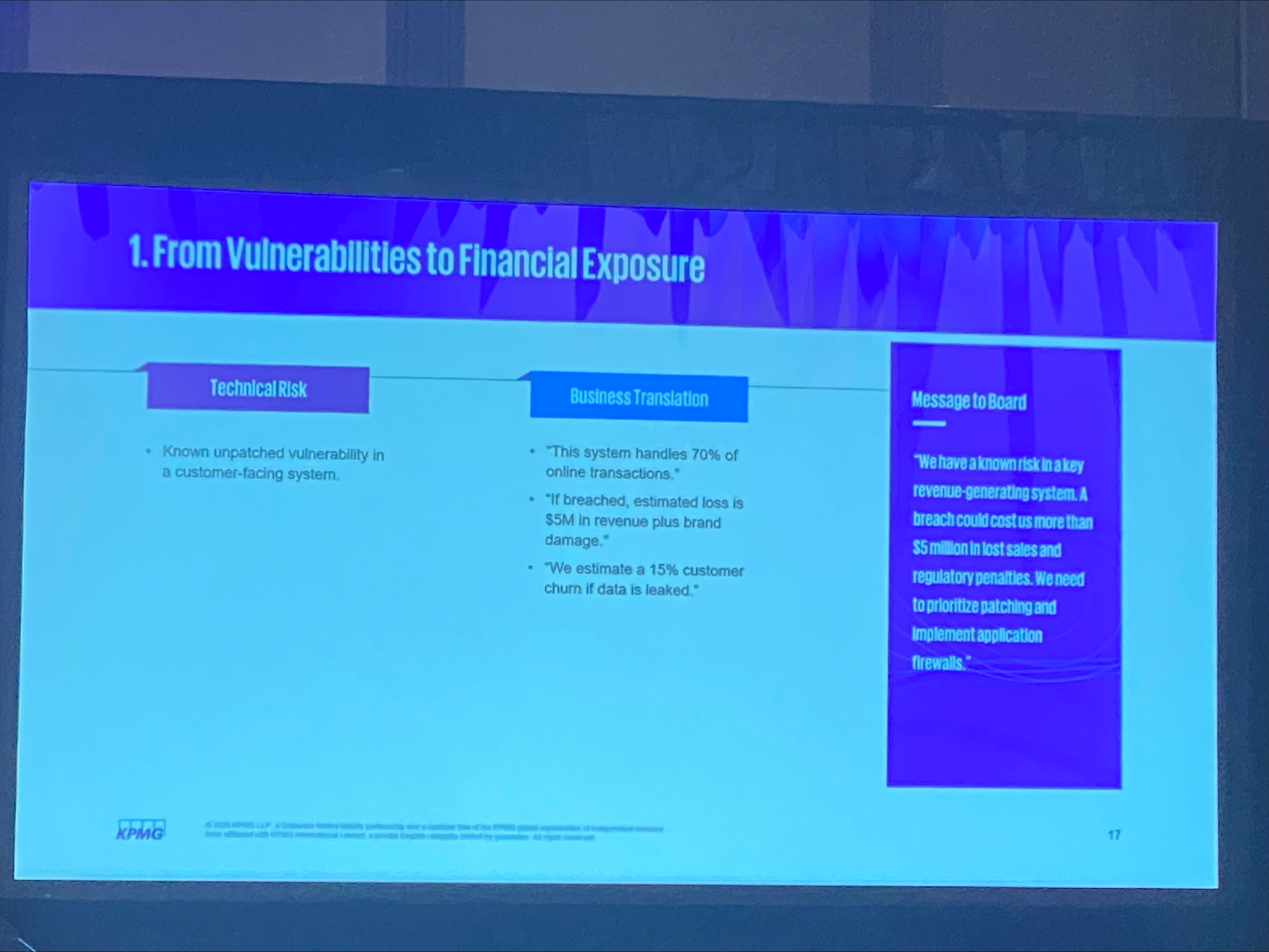

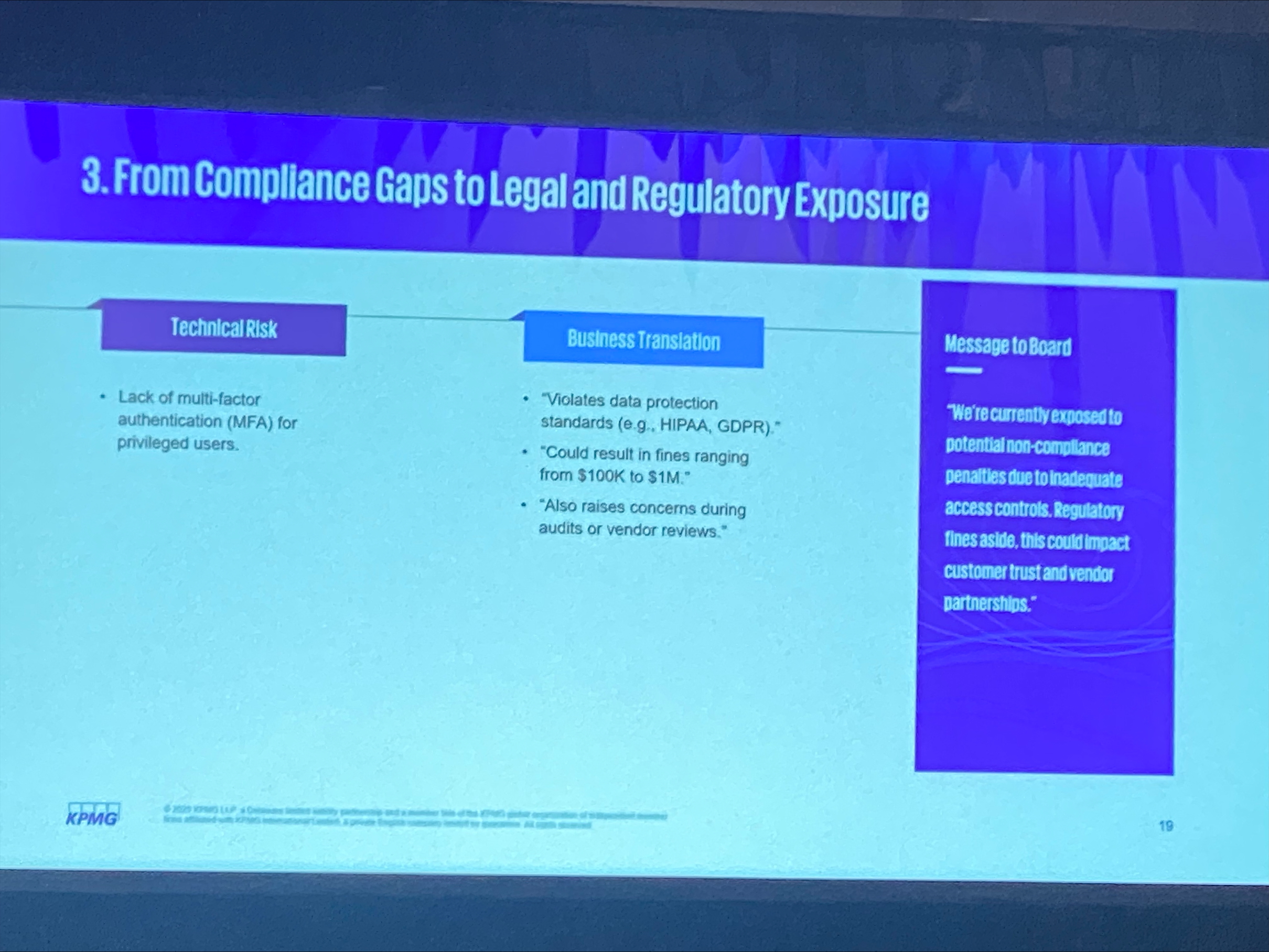

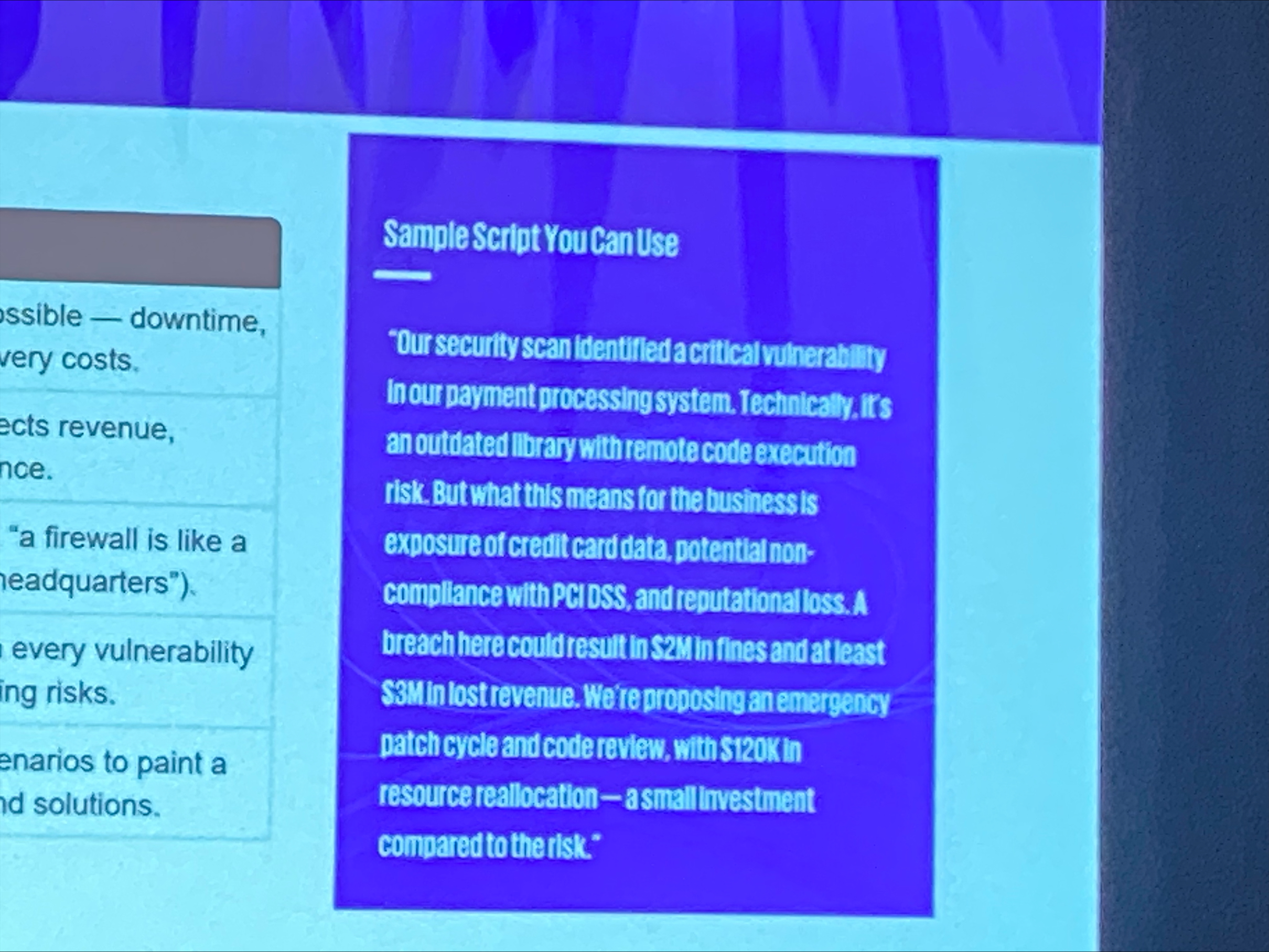

¶ Framework for Translation

-

From Vulnerabilities → Financial Exposure

-

From Compliance Gaps → Legal / Regulatory Exposure

-

Cyber Risk Quantification (CRQ)

- FAIR Model (Factor Analysis of Information Risk)

- Loss Event Frequency (LEF): How often a loss event is likely to occur

- Loss Magnitude (LM): The potential financial impact of a threat

- FAIR Model (Factor Analysis of Information Risk)

-

Tips for Effective Translation

- Use dollar values

- Tie metrics to business functions

- Use analogies

- Prioritize high-impact risks

- Tell a story

¶ The Next Generation of Cybersecurity Metrics

-

Transition from noise to financial metrics

- Vulnerability count → Top 10 risks by financial exposure

- Intrusion attempts → % of business-critical assets exposed

-

Key Dashboard Elements

- Overall risk posture showing declining financial exposure

- Financial visualizations showing likelihood and cost of events

- Interactive drill-downs and simulations

- Regulatory and compliance readiness tracking

-

Quarterly Communication

- Standardize reporting frequency

- Focus on key financial risk shifts

-

Key Takeaways

- Implement a quantitative framework

- Shift to value-based metrics

- Build a strategic dashboard

- Establish quarterly communication

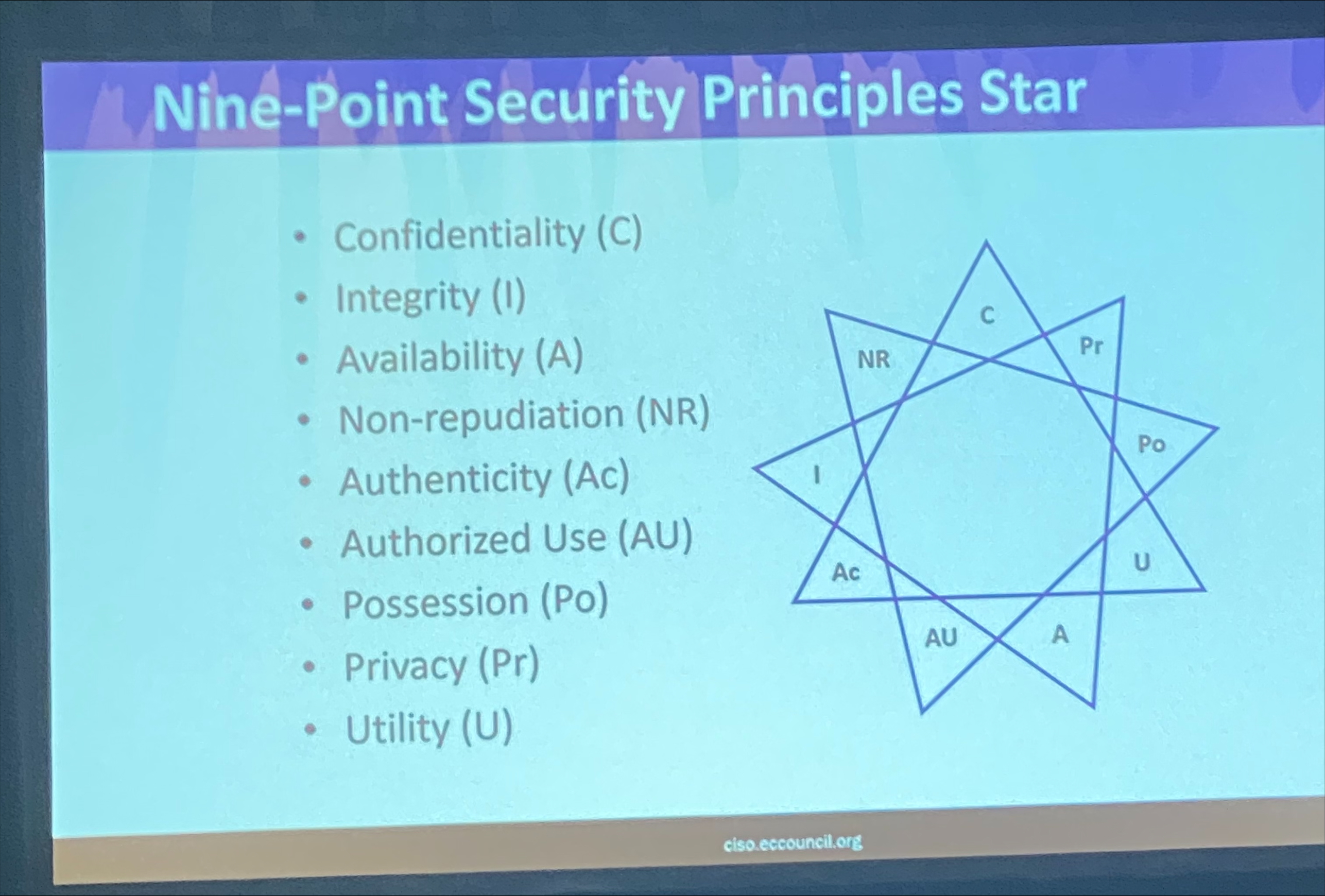

¶ Beyond the CIA Triad

¶ Speaker: Jim West

¶ The Nine Security Core Principles

- Confidentiality (C)

- Integrity (I)

- Availability (A)

- Non-Repudiation (NR)

- Authenticity (Ac)

- Authorized Use (AU)

- Possession (Po)

- Privacy (Pr)

- Utility (U)

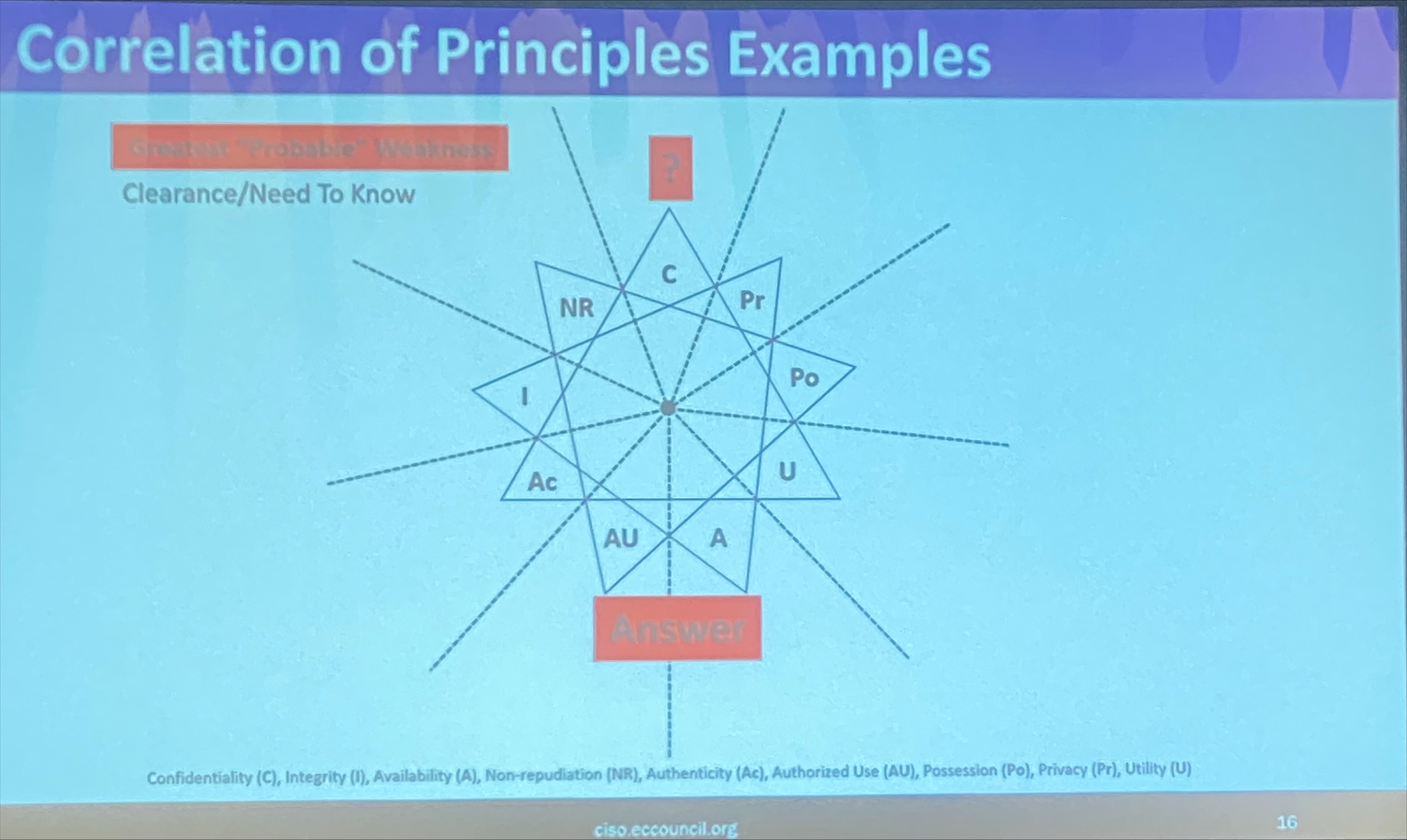

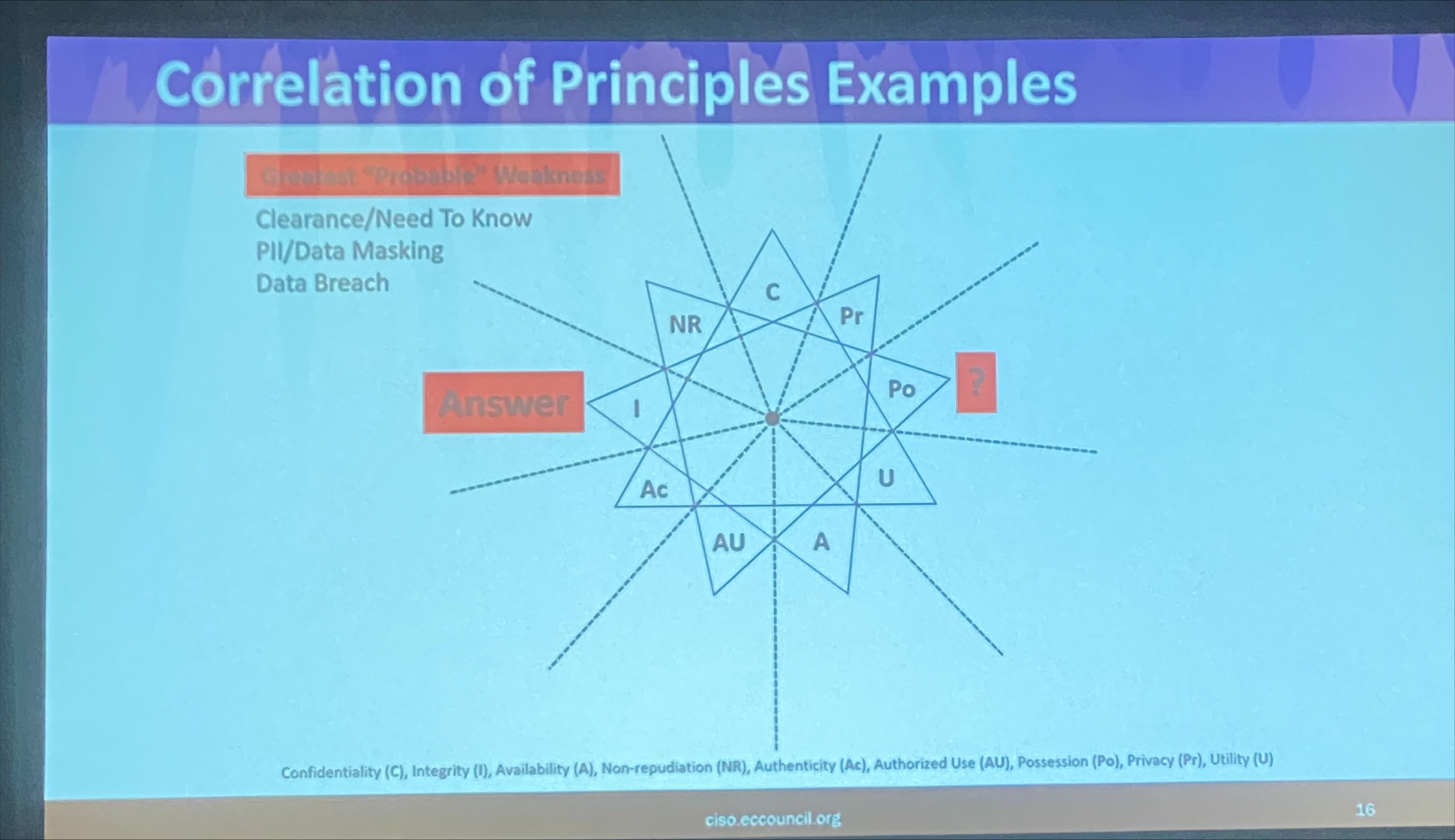

¶ The Nine-Point Security Principle Star

- Correlation of Principles

- The star’s endpoints show relationships, not opposites

- Correlation does not imply causation

- Example: DD Form 2875 (“System Authorized Access Request”) aligns with five of these nine points

¶ A Perspective on Security

- “Perspective is not fixed — it evolves over time.”

- Printers pose a possession risk because they convert binary to cleartext, effectively releasing data control.

- Auditor’s Perspective:

- Risk measurement should consider existing controls and countermeasures.

- Reveals gaps in achieving organizational security goals.

- VPN and PKI apply to all three CIA triad principles:

- Network / Public → Availability

- Private / Key → Confidentiality

- Virtual / Infrastructure → Integrity

Recommended Reading:

- Research papers on inherent vulnerabilities in PKI